We are led to believe that we’ll see mass adoption of Augmented Reality when the technology improves; but really, getting the experience into people’s hands is the biggest challenge.

If you follow me on a little journey through the twists and turns of AR history over the last five years, you’ll start to see why.

Being sensitive to the end user’s context is very important, but so is timing: leveraging new AR developments, riding market forces and navigating changing industry standards.

And as a bonus, if you’re in the business of building immersive experiences, I’m sure you’ll gain a much better understanding of the industry after looking into AR’s past.

For example, I remember learning the photo editing software Photoshop. I took classes, and one of my fellow students had worked in the print industry for over 20 years. He was able to tell me all about the standards of his industry (colour models such as RGB and CMYK, for example) that Photoshop was based upon. All of a sudden the software made sense and my learning accelerated!

Understanding where the industry has come from can help you understand where it is now, and where it is going.

Web-Based Augmented Reality Before “WebAR” Was a Thing

Early in 2015, I had the ambitious plan of automating my day job: rendering proposed architecture in its intended surroundings. Essentially “what it will look like when it’s built”, aka photomontages, or even – once Pokémon Go was released – Augmented Reality!

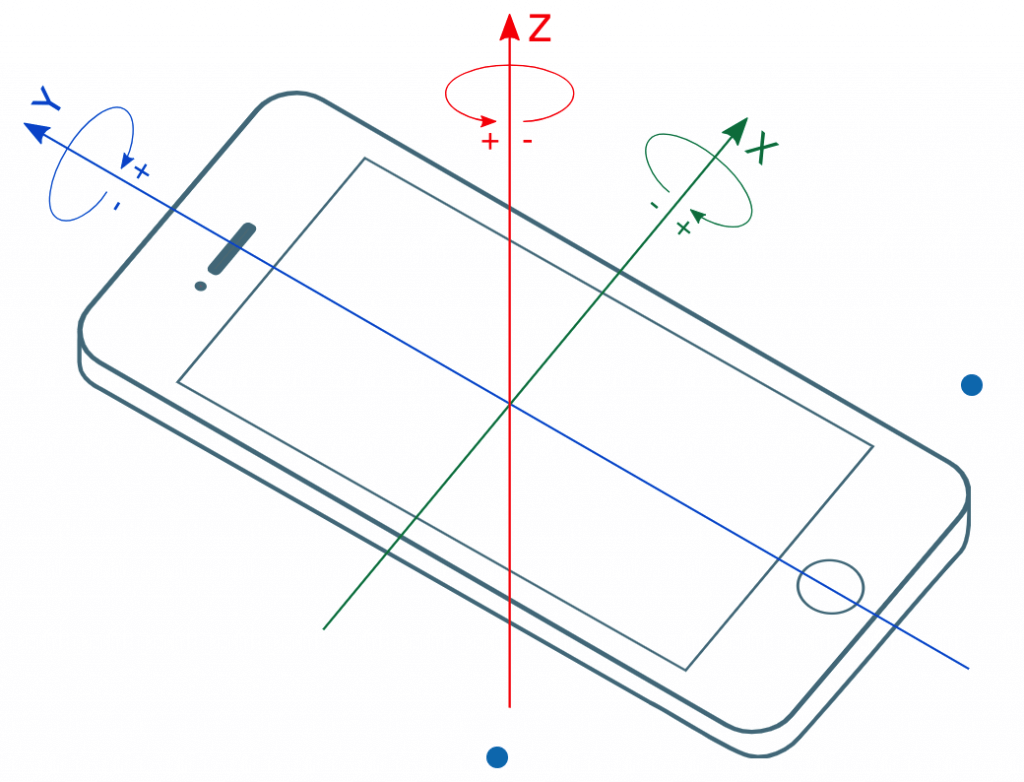

It was the time when apps were really “cool”, so naturally I wanted INsitu to have one, especially since an app would let us take advantage of the hardware on board mobile devices – such as the accelerometer and gyroscope – to provide a really accurate, convincing, to-scale AR experience.

Well, that was not to be, because our clients were not using mobile devices: they were primarily selling their products through their websites. So the web is where the Augmented Reality experience had to be.

User Experience (UX)

Without access to that mobile hardware, we equipped the application with an interface to assist users in augmenting their reality:

Immersion & Realism

Making a truly immersive AR experience is about blurring the lines between the physical and digital worlds, so we were keen to integrate the 3D models (our clients’ products) into the photographic context (the user’s environment) as convincingly as possible. We therefore used GPS to identify the location and rendered the weather and environment realistically to match, using the Dark Sky weather API. Here’s one we made earlier:

Even with these rendering improvements and the added immersion, we were still keen to improve the user journey and provide the best UX possible. So we investigated how we could still take advantage of the mobile hardware, even though the solution had to be web-based. Designing a web application, namely a Progressive Web App (PWA), was the answer.

Mobile Hardware, Sensors and Industry Standards

The World Wide Web Consortium (W3C) publishes standards that modern browsers follow, including APIs for accessing gyroscope data through the web browser. These give developers a way to read the orientation of mobile devices that have built-in gyroscopes.

The gyroscope data, which gives the device’s orientation, could be used to automatically set the correct perspective, and hence the scale, of the INsitu AR experience.
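To make that idea concrete, here is a minimal sketch, written in Python for readability, of how a single orientation reading can fix perspective and scale. It assumes the W3C DeviceOrientation convention for a phone held in portrait: `beta` is 0 when the phone lies flat and 90 when it is held upright. It ignores sideways roll (`gamma`). It also takes the camera's height above the ground and its vertical field of view as inputs, because the browser does not report either. The function names and the 1.5 m height below are hypothetical. In a browser, the same arithmetic would run in JavaScript on the `beta` value of each `deviceorientation` event.

```python
import math
from typing import Optional


def camera_pitch_from_beta(beta_deg: float) -> float:
    """Tilt of the rear camera below the horizon, in degrees.

    Assumes the DeviceOrientation convention for a phone in portrait:
    beta = 0 lying flat (screen up, camera pointing down), beta = 90 upright.
    """
    return 90.0 - beta_deg


def focal_length_px(image_height_px: int, vertical_fov_deg: float) -> float:
    # Pinhole model: half the image height spans half the vertical field of view.
    return (image_height_px / 2) / math.tan(math.radians(vertical_fov_deg) / 2)


def horizon_row(beta_deg: float, image_height_px: int, vertical_fov_deg: float) -> float:
    """Image row (pixels from the top) where the horizon line falls."""
    f = focal_length_px(image_height_px, vertical_fov_deg)
    pitch = math.radians(camera_pitch_from_beta(beta_deg))
    return image_height_px / 2 - f * math.tan(pitch)


def ground_distance(row: float, beta_deg: float, image_height_px: int,
                    vertical_fov_deg: float, camera_height_m: float) -> Optional[float]:
    """Horizontal distance (m) to the ground point seen at `row`, or None above the horizon."""
    f = focal_length_px(image_height_px, vertical_fov_deg)
    pitch = math.radians(camera_pitch_from_beta(beta_deg))
    # How far this row's line of sight points below the camera's optical axis.
    below_axis = math.atan((row - image_height_px / 2) / f)
    depression = pitch + below_axis  # total angle below the horizon
    if depression <= 0:
        return None  # at or above the horizon: the line of sight never meets the ground
    return camera_height_m / math.tan(depression)
```

For example, `ground_distance(540, 60, 1080, 60, 1.5)` places the centre of a 1080-pixel-tall frame about 2.6 m away when the phone is tilted to `beta = 60`. Knowing that distance is what lets a model be drawn at the right size.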

However, although the W3C had liberated the gyroscope, its attempt to set the camera free too was only supported experimentally, in some browsers such as Opera.

Anyhow, due to client demand, INsitu started to explore how to leverage 3D data for CAD automation and customer-driven product customisation. We took a break from developing our AR, but nevertheless kept an eye on developments.

Depth Mapping & Simultaneous Localisation and Mapping (SLAM)

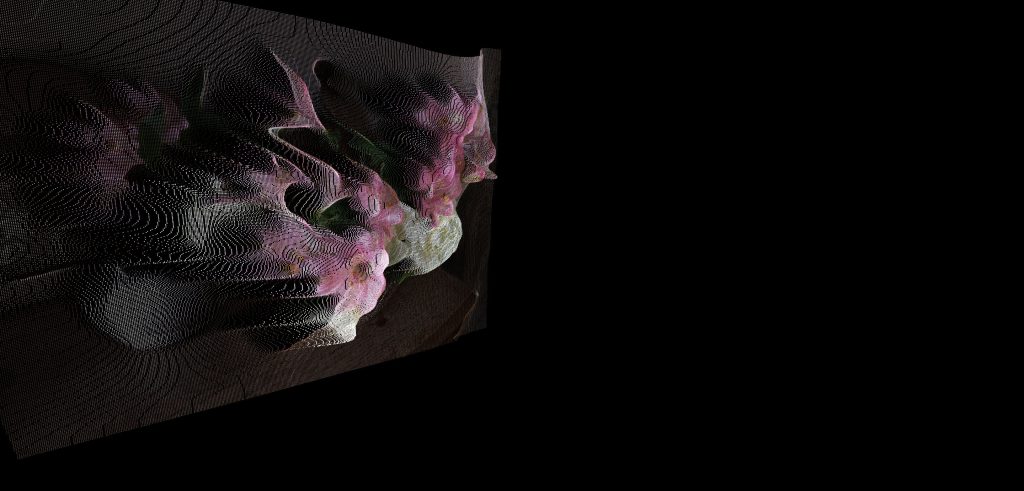

In 2016, 3D scanning was not a new concept, but bringing the technology aboard a consumer mobile device was challenging. Google had released an experimental feature called Lens Blur which, by way of a workaround (moving your phone in a line while it captured multiple photos), did indeed produce a 3D point cloud using photogrammetry. Here’s one I made earlier of a bouquet of flowers.

Photogrammetry estimates depth data (how far surfaces are from the camera) and stores it pixel by pixel in what’s known as a depth map. In a depth map, each pixel’s shade represents its depth. The depth map is then used to create the 3D point cloud.
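Once the camera's intrinsics are known, turning a depth map into a point cloud takes only a short calculation. The sketch below assumes a simple pinhole camera with focal lengths `fx`, `fy` and principal point `cx`, `cy` in pixels. These are hypothetical inputs; real values come from the device's calibration. It also assumes each depth-map entry has already been converted from a grey shade into metres, with 0 meaning "no depth".

```python
from typing import List, Tuple

Point3D = Tuple[float, float, float]


def depth_map_to_point_cloud(depth: List[List[float]], fx: float, fy: float,
                             cx: float, cy: float) -> List[Point3D]:
    """Back-project a depth map (rows of depths in metres) into 3D points.

    Pinhole model: pixel (u, v) with depth Z lands at
    X = (u - cx) * Z / fx, Y = (v - cy) * Z / fy in the camera's frame.
    """
    points: List[Point3D] = []
    for v, row in enumerate(depth):          # v = pixel row
        for u, z in enumerate(row):          # u = pixel column
            if z <= 0:
                continue                     # no depth recorded for this pixel
            x = (u - cx) * z / fx
            y = (v - cy) * z / fy
            points.append((x, y, z))
    return points
```

Every valid pixel becomes one point, which is why a single depth map from one viewpoint yields a dense but one-sided cloud.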

This was a nice precursor to the mother of all computer vision and 3D point cloud experiments: Google’s Project Tango. Although Tango has since been replaced by Google’s ARCore, its lasting legacy is bringing Simultaneous Localisation and Mapping (SLAM) to consumer phones. WebAR experiences still use that technique today.

SLAM is a technique in spatial computing and computer vision that lets a device map an environment in 3D while simultaneously locating itself within that map.
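Real SLAM systems have to handle noisy measurements, feature matching and loop closure, but the core loop can be shown in toy form. The sketch below is a hypothetical 2D, noise-free illustration, not how Tango or any WebAR engine is implemented. Each frame lists landmarks by ID, so matching is assumed to be solved, together with their positions relative to the device. The device localises itself by finding the rotation and translation that best align the landmarks it sees with those already in its map. It then maps any new landmarks using that pose.

```python
import math
from typing import Dict, List, Tuple

Vec = Tuple[float, float]
Pose = Tuple[float, float, float]  # x, y, heading (radians) in the world frame


def to_world(pose: Pose, p: Vec) -> Vec:
    """Transform a point from the device's frame into the world frame."""
    x, y, th = pose
    c, s = math.cos(th), math.sin(th)
    return (x + c * p[0] - s * p[1], y + s * p[0] + c * p[1])


def align_2d(local: List[Vec], world: List[Vec]) -> Pose:
    """Least-squares rotation + translation mapping `local` points onto `world` points."""
    n = len(local)
    lx, ly = sum(p[0] for p in local) / n, sum(p[1] for p in local) / n
    wx, wy = sum(q[0] for q in world) / n, sum(q[1] for q in world) / n
    dot = cross = 0.0
    for (px, py), (qx, qy) in zip(local, world):
        px, py, qx, qy = px - lx, py - ly, qx - wx, qy - wy  # centre both sets
        dot += px * qx + py * qy
        cross += px * qy - py * qx
    th = math.atan2(cross, dot)  # rotation that best lines up the centred sets
    c, s = math.cos(th), math.sin(th)
    # Translation moves the rotated local centroid onto the world centroid.
    return (wx - (c * lx - s * ly), wy - (s * lx + c * ly), th)


def slam(frames: List[Dict[str, Vec]]) -> Tuple[List[Pose], Dict[str, Vec]]:
    """Toy SLAM: each frame maps landmark id -> position as seen from the device."""
    world_map: Dict[str, Vec] = {}
    poses: List[Pose] = []
    for obs in frames:
        known = [k for k in obs if k in world_map]
        if not poses:
            pose: Pose = (0.0, 0.0, 0.0)  # the first frame defines the world origin
        elif len(known) >= 2:
            # Localisation: the pose that best lines up what we see with the map.
            pose = align_2d([obs[k] for k in known], [world_map[k] for k in known])
        else:
            raise ValueError("lost tracking: need at least two mapped landmarks")
        poses.append(pose)
        # Mapping: place newly seen landmarks into the world using that pose.
        for k, p in obs.items():
            world_map.setdefault(k, to_world(pose, p))
    return poses, world_map
```

Each frame must share at least two landmarks with the map built so far. Otherwise the sketch reports lost tracking, loosely like an AR app asking you to move your phone around before it can place content.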

I owned a Tango-enabled Lenovo phone and captured the footage below:

The technology takes advantage of the gyroscope to orient the cat correctly and at the right scale automatically. Furthermore, computer vision algorithms detect surfaces within the space as part of the live 3D mapping of the room, so the cat is able to jump up onto the sofa to retrieve the fish.
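One common way to find surfaces such as a floor or a sofa seat in a point cloud is RANSAC plane fitting. The method repeatedly fits a plane through three random points and keeps the plane that the most points lie close to. The sketch below is a generic version of that technique, not a description of Tango's own pipeline. The 2 cm inlier threshold and the iteration count are illustrative assumptions.

```python
import random
from typing import List, Optional, Tuple

Vec3 = Tuple[float, float, float]
Plane = Tuple[Vec3, float, List[int]]  # (unit normal n, offset d, inlier indices) for n.p + d = 0


def _sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def _cross(a: Vec3, b: Vec3) -> Vec3:
    return (a[1] * b[2] - a[2] * b[1], a[2] * b[0] - a[0] * b[2], a[0] * b[1] - a[1] * b[0])


def _dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def ransac_plane(points: List[Vec3], iterations: int = 200,
                 threshold: float = 0.02, seed: int = 0) -> Optional[Plane]:
    """Return the plane with the most points within `threshold` metres of it."""
    rng = random.Random(seed)  # seeded so results are repeatable
    best: Optional[Plane] = None
    for _ in range(iterations):
        a, b, c = rng.sample(points, 3)  # hypothesis: a plane through three random points
        n = _cross(_sub(b, a), _sub(c, a))
        length = _dot(n, n) ** 0.5
        if length < 1e-9:
            continue  # the three points are (nearly) collinear, so they define no plane
        n = (n[0] / length, n[1] / length, n[2] / length)
        d = -_dot(n, a)
        # Score the hypothesis by how many points lie close to the plane.
        inliers = [i for i, p in enumerate(points) if abs(_dot(n, p) + d) < threshold]
        if best is None or len(inliers) > len(best[2]):
            best = (n, d, inliers)
    return best
```

Removing the inliers and running the function again would pick out the next-largest surface, such as the sofa seat.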

The device’s time-of-flight (ToF) camera captures the 3D point cloud of the room. The camera measures the time it takes for light to leave it, bounce off a surface and come back (hence “time of flight”). That time gives the total distance travelled, and halving it gives the distance to the surface. These distances are used to build a depth map for the 3D point cloud.
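The distance calculation itself is simple: light covers the path out and back, so the surface lies half the round-trip distance away. Light covers only about 30 cm per nanosecond, so timing a pulse directly requires very fine timing resolution. Many ToF sensors instead measure the phase shift of continuously modulated light, and the second function shows that variant. Both are minimal sketches with hypothetical names.

```python
import math

SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def tof_distance_m(round_trip_s: float) -> float:
    """Distance to a surface from the round-trip time of a light pulse."""
    # The light travels out and back, so the surface is half the total path away.
    return SPEED_OF_LIGHT_M_PER_S * round_trip_s / 2


def phase_tof_distance_m(phase_shift_rad: float, modulation_hz: float) -> float:
    """Distance from the phase shift of light modulated at `modulation_hz`.

    A shift of 2*pi equals one modulation period of round-trip delay. Beyond
    that, readings wrap around (phase ambiguity), which this sketch ignores.
    """
    round_trip_s = phase_shift_rad / (2 * math.pi * modulation_hz)
    return tof_distance_m(round_trip_s)
```

A 20-nanosecond round trip, for instance, corresponds to a surface about 3 m away.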

Impressive, but in 2017 it was unlikely that every mobile vendor would ship sophisticated ToF cameras in their devices so that AR could achieve mass adoption. Even now, ToF sensors are not widely adopted in mobile devices.

In fact, the next big development in AR was the arrival of the AR frameworks ARKit and ARCore. They weren’t as hardware-intensive as ToF cameras: they tracked the device using its ordinary camera together with its motion sensors. This made them much more practical for mobile vendors to support. Depth could also be inferred without ToF hardware thanks to the “dual cameras” appearing in mobile devices around the same time. Two cameras spaced apart from each other provide the imagery needed to infer depth data and generate a corresponding point cloud. The process is automatic, and it produces much better results than Google’s Lens Blur feature (a sort of “hack” that used only one camera).
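Depth from two cameras comes down to triangulation. A point seen by both cameras appears shifted sideways between the two images, and this shift is called the disparity. For rectified, side-by-side cameras, the point's depth is focal length × baseline ÷ disparity. The sketch below finds the disparity of one pixel by block matching along a single image row, then converts that disparity to depth. The function names are hypothetical, as are the 700-pixel focal length and 12 mm baseline used below.

```python
from typing import List, Optional


def match_disparity(left_row: List[float], right_row: List[float], x: int,
                    max_disparity: int, half_window: int = 2) -> Optional[int]:
    """Sideways shift (pixels) of the patch around left_row[x] in right_row.

    Block matching with a sum-of-absolute-differences cost. Assumes rectified
    cameras, so a point at column x in the left image appears at column
    x - disparity in the right image, and that the window fits inside the row.
    """
    patch = left_row[x - half_window: x + half_window + 1]
    best_d, best_cost = None, float("inf")
    for d in range(max_disparity + 1):
        start = x - d - half_window
        if start < 0:
            break  # the candidate window would fall off the left edge of the row
        candidate = right_row[start: start + len(patch)]
        cost = sum(abs(a - b) for a, b in zip(patch, candidate))
        if cost < best_cost:
            best_d, best_cost = d, cost
    return best_d


def stereo_depth_m(disparity_px: Optional[int], focal_length_px: float,
                   baseline_m: float) -> Optional[float]:
    """Depth = focal length x baseline / disparity, for rectified stereo cameras."""
    if disparity_px is None or disparity_px <= 0:
        return None  # no measurable shift (very far away) or no match found
    return focal_length_px * baseline_m / disparity_px
```

With a 4-pixel disparity, `stereo_depth_m(4, 700, 0.012)` gives 2.1 m. Nearby objects shift more between the two views than distant ones, which is why stereo depth becomes less precise with distance.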

ARKit & ARCore

ARKit (Apple) and ARCore (Android) took away a lot of the heavy lifting for developers creating AR applications for iOS and Android. Both Apple and Google also made AR a more integral part of their devices, giving developers a platform to build for. Developers could now build AR apps more easily and quickly, and reach a much wider audience. They needn’t be experts in AR either, because the frameworks wrapped complicated computer vision code in simple functions that developers could easily use in their applications.

The frameworks were a great development in democratising AR for all developers. Furthermore, the frameworks’ SDKs (software development kits) were usable within 3D-focused development platforms such as Unity, making development and app distribution a whole lot easier.

So most people thought there’d be an avalanche of AR experiences, but not quite. An AR experience had to be built on Google’s or Apple’s native technology, through the channels they made available on their devices. That basically meant an app downloaded from their app stores; there was no other way of bringing such an experience to users on these mobile platforms.

And the problem is that there is friction in getting users to download apps, especially apps intended to be used only once. That is typically the case with AR applications built to promote campaigns.

However, there is an alternative: deploy your AR experience with WebAR, so that your web-based AR application can be launched directly and seamlessly from the browser.

WebAR

Now, in 2021, AR on the web has been explored much further than it had been in 2015. There are several frameworks for building AR on the web, known collectively as WebAR. Just like ARKit and ARCore, they make developing AR apps much easier.

One such framework, which combined its own SLAM engine with ARKit and ARCore, was first built for developers creating apps in Unity (for any mobile platform). Its creators then ported their SLAM engine to JavaScript (the programming language of the web), so that their platform could be used to create web-based AR apps. Mobile users could now open these experiences directly from the web without having to download an app, and still get everything they’d expect from a modern AR app. Brilliant!

Build with Amazon Sumerian | S3 E3 – “Spider-Man: Into the Spider-Verse” AR Experience with 8th Wall

WebAR is ideal for campaigns, which my sources tell me is where much of the demand is currently coming from. You see, marketing and advertising people need to sell products and services, and what better way to captivate and inspire consumers than with an experience more immersive than traditional media? Such professionals have embraced storytelling (aka brand storytelling) as a powerful way of getting consumers invested in brands. Now they have a powerful new medium to do so: immersive experiences.

However, it would be an awful shame if the power of AR were only leveraged for sales and marketing, as experiences to be thrown away when the campaign is over. Okay, I’m exaggerating. There are numerous AR experiences out there that are used over and over again: apps that users happily download from the app store, or WebAR experiences they keep revisiting.

However, there needs to be more.

How?

Well, AR needs to become more of a utility, something that you rely on and need for your daily life, like any other technology that has achieved mass adoption.

But again, how?

The Next Phase Of AR Adoption?

Now, in 2021, there are many examples of Augmented Reality being done well.

Good Augmented Reality experiences superimpose digital content realistically into the physical world.

However, these AR apps know little about the real-world environment into which this digital content is being superimposed.

Computer vision is being used for surface detection and, in some cases, for building maps of the environment as part of SLAM, but more can be done.

Fundamentally, the gap between the physical and the digital worlds needs to get smaller and smaller.

And what better way to do that than to build a worldwide spatial intelligence accessible to all: an open AR cloud.

More on that next time.